An LLM gateway provides a central hub for accessing multiple models, with added features like pre-processing and monitoring.

The rise of commercial LLMs like ChatGPT has transformed how businesses and industries integrate AI into their workflows. From UI/UX design to backend development and data analysis, AI, particularly LLMs and GenAI, has become essential for staying competitive. This rapid innovation has also created a need to use multiple LLMs within the same project, whether to compare performance, optimize costs, or swap between models during development and production.

As such, companies like OpenAI, Anthropic, Google, Meta, Mistral, etc., which have pioneered LLM development, offer their APIs to customers and developers for various use cases. However, integrating and managing various LLMs from different providers can be complex. This is where an LLM gateway steps in, offering a unified and streamlined solution to simplify the adoption and utilization of LLMs.

In this article we will discuss in detail:

- What is an LLM Gateway and how is it helpful?
- Core features of LLM gateways
- Advantages of implementing an LLM gateway
- Architecture overview of LLM gateway

So, let’s understand what an LLM gateway is.

What is an LLM Gateway?

An LLM Gateway is middleware that connects user applications with LLM service providers. This gateway streamlines the integration process and offers a single, unified interface to access and manage various LLMs.

In simple terms, an LLM Gateway acts as a service that receives requests from user applications, processes or batches them, and forwards them to the chosen LLM service provider. Once the LLM responds, the gateway collects the response, processes it if necessary, and delivers it back to the original requester.

This system not only simplifies communication between multiple LLMs and your application but also handles tasks like managing requests, optimizing performance, and ensuring security—all within one platform.

The gateway’s primary role is to handle requests and responses, ensuring seamless communication between applications and the chosen LLM. It provides features such as enhanced security, cost management, and performance optimization, all in a single UI or SDK. Essentially, it simplifies complex interactions with multiple LLMs.

Imagine you run a customer service department. Let’s say you want to use the LLMs — A, B, and C — to help answer customer questions.

Without an LLM Gateway, you’d need to set up connections to A, B, and C separately. Also, external services like prompt management, cost monitoring, etc. need to be set up separately. Your team would have to learn how to use each one. They’d need different passwords and ways to send requests to each LLM. Moreover, setting up these connections with various components can be tedious, and maintaining them over time can be cumbersome.

Workflow without LLM Gateway | Source: Mastering LLM Gateway: A Developer’s Guide to AI Model Interfacing

With an LLM Gateway, you set up one connection for all three LLMs: A, B, and C. It also doesn’t matter whether an LLM is served through an internal or external API.

The gateway is designed to handle both internal LLMs (like Llama, Falcon, or models fine-tuned in-house) and external APIs (such as OpenAI, Google, or AWS Bedrock). The gateway manages connections to these LLM services for you, streamlining the integration process. Your team only needs to learn one system. They can use one set of passwords or credentials. They can send all requests through the gateway. All requests and responses are routed through the gateway, which simplifies adding additional LLMs or features in the future.

In a nutshell, the gateway does the hard work by:

- Picking the best LLM for each prompt through smart routing. You can also write conditional statements to route certain types of requests to specific LLMs. For instance, a request to “write a draft for an essay” can be routed to GPT-4o, research requests to Perplexity, coding requests to Claude 3.5 Sonnet, and reasoning requests to OpenAI’s o1.
- Keeping track of costs.
- Making sure that customer data stays safe.
- Helping your system run faster.
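The conditional routing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the keyword rules, the `route_request` function, and the model labels are hypothetical, and the labels are not meant to be exact provider model identifiers.

```python
# Hypothetical keyword rules; a real gateway might use a classifier or
# explicit request metadata instead of substring matching.
ROUTING_RULES = [
    (("essay", "draft"), "gpt-4o"),
    (("research", "sources"), "perplexity"),
    (("code", "bug", "function"), "claude-3.5-sonnet"),
    (("prove", "reason", "puzzle"), "o1"),
]
DEFAULT_MODEL = "gpt-4o"  # assumed fallback when no rule matches


def route_request(prompt: str) -> str:
    """Return the label of the first model whose keywords appear in the prompt."""
    text = prompt.lower()
    for keywords, model in ROUTING_RULES:
        if any(word in text for word in keywords):
            return model
    return DEFAULT_MODEL
```

Rules are checked in order, so earlier rules win when a prompt matches more than one category.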

So, what features must an LLM gateway have so that users can efficiently manage various LLMs for their use cases?

Key features of LLM Gateway

In this section let’s discuss some of the key features and expand in detail what each of them offers.

Unified API

One of the most significant advantages of an LLM Gateway is its ability to provide a unified API. A unified API is a type of interface that allows users to access various LLMs from different service providers in one common interface.

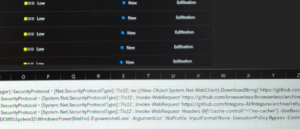

Comparing APIs from OpenAI above and Anthropic below | Source: Author

For instance, as the image above shows, the API calls for OpenAI and Anthropic differ slightly; in any case, they are not identical. Similarly, APIs from other providers, including open-source providers, are written and called differently. With an LLM Gateway, these differences can be unified and generalized. This means users don’t have to navigate elsewhere to switch between LLMs. They can access every LLM from a single place.

The unified API also allows users to maintain the same codebase to integrate various LLMs into their applications seamlessly. Also, it maintains consistency across the board.

A unified API allows developers to access a wide range of LLMs from different providers without having to understand the intricacies of each provider’s specific API. Essentially, it simplifies the development process, reduces the learning curve, and accelerates the integration of LLMs into applications.
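One way to picture a unified API is as a set of small adapters behind a single request type. The sketch below is a simplified illustration, not any gateway’s actual implementation: `ChatRequest`, `build_payload`, and the adapter functions are hypothetical names, and the payload shapes are reduced to the one difference they are meant to show (where the system prompt goes). Check each provider’s current API reference before relying on specific fields.

```python
from dataclasses import dataclass


@dataclass
class ChatRequest:
    """Provider-neutral request shape used by the hypothetical gateway."""
    model: str
    system: str
    user: str
    max_tokens: int = 256


def to_openai_payload(req: ChatRequest) -> dict:
    # OpenAI-style chat requests carry the system prompt as the first message.
    return {
        "model": req.model,
        "max_tokens": req.max_tokens,
        "messages": [
            {"role": "system", "content": req.system},
            {"role": "user", "content": req.user},
        ],
    }


def to_anthropic_payload(req: ChatRequest) -> dict:
    # Anthropic-style message requests take the system prompt as a top-level field.
    return {
        "model": req.model,
        "max_tokens": req.max_tokens,
        "system": req.system,
        "messages": [{"role": "user", "content": req.user}],
    }


ADAPTERS = {"openai": to_openai_payload, "anthropic": to_anthropic_payload}


def build_payload(provider: str, req: ChatRequest) -> dict:
    """Translate one neutral request into the named provider's payload shape."""
    return ADAPTERS[provider](req)
```

Application code only ever constructs a `ChatRequest`; supporting another provider means adding one adapter rather than touching every call site.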

Centralized Key Management

Each LLM provider issues an API key through which we can access the LLM and its capabilities. If we are working with five different LLMs, securely managing the API keys can be challenging because the keys and API endpoints all differ. We don’t want to copy-paste API keys every time we work on something new, A/B test different versions of the app, or update the existing app.

An LLM Gateway addresses this challenge by providing a centralized system for managing these keys.

Example of managing multiple APIs | Source: liteLLM

For instance, in the image above, you can see how liteLLM lets you set API keys using environment variables. Once the required keys are stored, you can call different LLMs using a single function. For example, you can call GPT-3.5 Turbo and Claude 2 with the following calls:

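    from litellm import completion

    # Same function and message format for both providers; only the model name changes.
    completion(model="gpt-3.5-turbo", messages=[{"content": "what's the weather in SF", "role": "user"}])
    completion(model="claude-2", messages=[{"content": "what's the weather in SF", "role": "user"}])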

This ensures better security and simplifies key administration.
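As a rough sketch of what centralizing key lookup can look like, the snippet below resolves every provider key through one function that reads environment variables. The `PROVIDER_ENV_VARS` mapping and `get_api_key` helper are hypothetical names for illustration; only the standard library is used.

```python
import os

# Hypothetical mapping from provider name to the environment variable holding its key.
PROVIDER_ENV_VARS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def get_api_key(provider: str) -> str:
    """Look up a provider key in one place instead of scattering it through app code."""
    env_var = PROVIDER_ENV_VARS.get(provider)
    if env_var is None:
        raise KeyError(f"unknown provider: {provider}")
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return key
```

Because the application never embeds a key, rotating one only requires updating the environment in which the gateway runs.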

Authentication and Attribution

In scenarios where multiple users need to access LLMs for various needs, it’s crucial to have a mechanism for authentication and usage tracking. For instance, a computational neuroscience startup has various teams: mathematicians, neuroscientists, AI/ML engineers, HR, etc. Let’s say that each team requires an LLM to assist them in their work. A gateway in this scenario can help them implement role-based access control, ensuring each team has a secure way to access the LLM. For example:

- Neuroscientists can use an LLM to review and summarize literature, generate hypotheses, and learn about recent experiments.
- AI/ML engineers can use an LLM as a code assistant to develop new models.
- HR can use an LLM to draft emails, manage resources, review resumes, etc.

LLM gateways make sure that each team is equipped with the necessary data and that there is no leakage of information from other teams.

Similarly, the gateway logs and attributes LLM usage to each team or individual. For example, if a mathematician uses an LLM to generate a mathematical model, the gateway logs the prompts and token usage and attributes them to the mathematics team. It does the same for other teams.

As such, LLM gateways offer safe storage and centralized management of API keys. They do this by protecting root keys and allocating unique keys to each developer or product for accountability.

A flowchart of how TrueFoundry manages authentication of different users | Source: TrueFoundry

LLM Gateways provide features for authentication and attribution on a per-user and per-model basis. This ensures secure access and enables accurate tracking of LLM usage.
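The combination of per-team keys, access checks, and usage attribution can be sketched as follows. Everything here is an assumption made for illustration: the virtual key strings, team names, model labels, and the `authorize` and `record_usage` helpers are hypothetical and do not correspond to a specific gateway’s API.

```python
from collections import defaultdict

# Hypothetical virtual keys issued by the gateway; the provider root keys never leave it.
VIRTUAL_KEYS = {
    "vk-neuro-01": {"team": "neuroscience", "allowed_models": {"summarizer"}},
    "vk-ml-01": {"team": "ml-engineering", "allowed_models": {"summarizer", "code-assistant"}},
}

usage_by_team = defaultdict(int)  # team name -> total tokens attributed


def authorize(virtual_key: str, model: str) -> str:
    """Return the caller's team if the key is known and may use the model."""
    entry = VIRTUAL_KEYS.get(virtual_key)
    if entry is None:
        raise PermissionError("unknown key")
    if model not in entry["allowed_models"]:
        raise PermissionError(f"{entry['team']} may not use {model}")
    return entry["team"]


def record_usage(virtual_key: str, model: str, tokens: int) -> None:
    """Attribute token usage to the team that owns the key."""
    team = authorize(virtual_key, model)
    usage_by_team[team] += tokens
```

Each request is checked before it is counted, so a team is never billed for a model it is not allowed to use.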

Dynamic Model Deployment

The ability to efficiently deploy, scale, and manage different LLM models is essential for organizations. In an LLM Gateway, dynamic model deployment allows models to be:

- Loaded, deployed, and scaled automatically based on demand.
- Selected based on task-specific requirements, such as different models for text summarization, coding, or data analysis.
- Customized dynamically (e.g., prompt engineering or lightweight parameter adjustments like LoRA or prefix tuning) to meet unique user needs.
- Deployed across different computing resources, optimizing for performance, cost, or speed depending on each task’s computational requirements.

Imagine a neuroscientist using an LLM for text summarization of the latest neuroscience papers. At the same time, the AI/ML team needs an LLM to help design a novel neural network model. You see, both teams can have their respective models deployed dynamically based on their needs. If the neuroscientist’s tasks are computationally light, the system can prioritize resources to the more computationally heavy task of the AI/ML engineers. When both tasks are completed, the gateway can shut down or scale down those models to save on resource costs.

Request/Response Processing

At its core, an LLM Gateway acts as an intermediary, efficiently processing requests from applications. It essentially does two main tasks:

- Receive requests from teams or users
- Deliver an appropriate response to the team

Now, let’s understand the intermediate steps involved in request and response processing:

1. Request submission: When a user submits a request to the LLM Gateway, the gateway checks who submitted it, identifying the user via an authentication mechanism.
2. Routing process: If authentication succeeds, the gateway determines which LLM should handle the task. This includes routing the request to the appropriate preprocessing technique.
3. Preprocessing: The request is preprocessed and formatted before it is sent to the model. For instance, if the query is large, the gateway breaks it into smaller chunks and sends them to the model.
4. Modeling: The processed request is sent to the model, and the response it returns is post-processed. The smaller chunks are aggregated, formatted, and made ready for delivery.
5. Response delivery: Once the response is ready, it is delivered according to the user’s requirements.

Request/Response processing flowchart | Source: Author

In scenarios where multiple teams work together on different tasks, the gateway might prioritize tasks as well. This happens based on the urgency and business goals. Also, frequently used requests or queries are cached to reduce processing time.
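The request and response steps above, including chunking and caching, can be sketched as a single function. This is a minimal illustration under stated assumptions: `handle_request` and `chunk_text` are hypothetical names, the model is passed in as a plain callable, routing and prioritization are omitted, and chunking by word count stands in for whatever splitting strategy a real gateway would use.

```python
from typing import Callable


def chunk_text(text: str, max_words: int) -> list[str]:
    """Split a long query into word-bounded chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def handle_request(
    user: str,
    text: str,
    known_users: set[str],
    call_model: Callable[[str], str],
    cache: dict[str, str],
    max_words: int = 50,
) -> str:
    # Request submission: identify the caller.
    if user not in known_users:
        raise PermissionError(f"unknown user: {user}")
    # Cached answers skip the model entirely.
    if text in cache:
        return cache[text]
    # Preprocessing: split the query into chunks (routing is omitted in this sketch).
    chunks = chunk_text(text, max_words)
    # Modeling and post-processing: call the model per chunk, then aggregate.
    response = " ".join(call_model(chunk) for chunk in chunks)
    cache[text] = response
    # Response delivery.
    return response
```

Passing the model in as a callable keeps the pipeline testable without network access; a stub function is enough to exercise every step.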

Traffic Routing

In cases where multiple LLM instances or providers are being used, an LLM Gateway can intelligently route traffic to ensure optimal performance and cost efficiency. This might involve directing requests to the most appropriate LLM based on factors like workload, availability, or cost.

For instance, let’s say that you are using OpenAI o1 and Claude 3.5 Sonnet to build a website project. Both o1 and Claude 3.5 Sonnet limit how many responses you can generate. Once you run out of responses, there is a cooldown period of one week in the case of o1 and approximately 2 hours in the case of Sonnet. So the idea here is to use both models effectively without crossing the response limit. When you register both models in an LLM gateway, you can intelligently switch between them. This allows you to make efficient use of the available responses.
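Switching between models as their quotas run out can be sketched with a small router. The `QuotaRouter` class, the model labels, and the quota numbers are hypothetical; a real gateway would also track the cooldown timers itself rather than rely on a manual `reset`.

```python
class QuotaRouter:
    """Pick the first model that still has requests left in its quota window."""

    def __init__(self, quotas: dict[str, int]):
        # Hypothetical per-window request limits, e.g. {"o1": 2, "sonnet": 3}.
        self.remaining = dict(quotas)

    def pick(self) -> str:
        for model, left in self.remaining.items():
            if left > 0:
                self.remaining[model] = left - 1
                return model
        raise RuntimeError("all models have exhausted their quota")

    def reset(self, model: str, quota: int) -> None:
        """Call when a model's cooldown window ends."""
        self.remaining[model] = quota
```

Models are tried in the order they were registered, so listing the preferred model first makes the other one a fallback.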

The architecture of LLM gateways, especially when deployed in a distributed environment, plays a crucial role in enabling this dynamic routing.

Security & Compliance

Ensuring the security and privacy of data processed by LLMs is important. LLM Gateways can enforce security policies, encrypt sensitive information, and manage access control to protect data. They can also help organizations comply with relevant regulations like GDPR or HIPAA. They act as a security layer, adding an extra level of protection when handling sensitive data.

Model and Cloud Agnosticism

Many LLM Gateways are designed to be model and cloud-agnostic. This means they can be used with various LLM providers and deployed across different cloud environments. This gives organizations the flexibility to choose the best LLM and deployment strategy for their needs.

Advantages of Implementing an LLM Gateway

Using an LLM gateway offers significant advantages by simplifying development, improving security, and enhancing overall performance. Let’s understand each of these points in detail.

Simplified Development and Maintenance

LLM gateways provide a unified interface for integrating multiple language models, eliminating the need to deal with different APIs from different providers. Because all the LLMs can be accessed via a single interface, it becomes easy to experiment with different ideas. This also reduces complexity for developers, who can focus on building features rather than on the nuances of LLM integrations.

Developers can tune model-specific parameters like temperature, seed, max tokens, etc. to tailor model behavior for different users or teams. In this way, an LLM Gateway also accelerates development with different models.

Also, when experimenting with different models or switching providers due to cost or performance, the gateway streamlines the process. This allows changes without the need to rewrite the application code or even the entire codebase. This flexibility also extends to centralized management of API keys, minimizing the exposure of sensitive data and enabling seamless updates across different applications. During maintenance or when adding new features this cuts down development time.

Improved Security and Compliance

We didn’t dwell much on security in the previous section, so here we will discuss it in a little more detail as an advantage. Security and regulatory compliance are critical because when a user interacts with the gateway, information is shared as part of the request. Some of that information can be sensitive and private. It must be encrypted and handled carefully.

An LLM gateway acts as a centralized checkpoint, managing authentication, access control, and rate limiting for all LLM interactions. This setup enforces consistent security protocols across your AI applications.

For businesses in regulated sectors like healthcare or finance, LLM gateways can be equipped with additional layers of security, such as PII (Personally Identifiable Information) detection and audit logging, ensuring compliance with regulations such as GDPR or HIPAA. Furthermore, organizations can control which models or providers process specific queries, ensuring sensitive tasks are handled only by trusted, secure endpoints.
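To make the idea of a PII detection layer concrete, here is a deliberately small sketch that masks email addresses and US-style Social Security numbers before a prompt leaves the gateway. The `redact_pii` function and both patterns are illustrative assumptions; two regular expressions are nowhere near sufficient for real GDPR or HIPAA compliance.

```python
import re

# Deliberately simple patterns for illustration; production PII detection
# usually needs far broader coverage than two regular expressions.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
US_SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_pii(text: str) -> tuple[str, int]:
    """Mask matches and return the redacted text plus the number of redactions."""
    text, n_email = EMAIL_RE.subn("[EMAIL]", text)
    text, n_ssn = US_SSN_RE.subn("[SSN]", text)
    return text, n_email + n_ssn
```

Returning the redaction count alongside the text gives the audit log something to record without storing the sensitive values themselves.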

Enhanced Performance and Cost Efficiency

With smart routing and caching mechanisms, LLM gateways can significantly improve application performance while optimizing costs. Caching common queries reduces latency and the number of API calls to LLM providers. This not only enhances user experience but also lowers operational expenses.
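A response cache of the kind described here can be sketched as an exact-match lookup with an expiry time. The `ResponseCache` class is a hypothetical illustration; it only matches identical model and prompt pairs, whereas some gateways may also offer similarity-based caching.

```python
import hashlib
import time


class ResponseCache:
    """Exact-match cache keyed on model and prompt, with a time-to-live."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}  # key -> (expiry time, response)

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        return hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()

    def get(self, model: str, prompt: str):
        entry = self._store.get(self._key(model, prompt))
        if entry is None or entry[0] <= self.clock():
            return None  # miss or expired
        return entry[1]

    def put(self, model: str, prompt: str, response: str) -> None:
        self._store[self._key(model, prompt)] = (self.clock() + self.ttl, response)
```

Every cache hit is one provider call that is never made, which is where both the latency and the cost savings come from.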

Smart routing enables the selection of the most appropriate model based on various factors. These factors could be query type, cost, and performance requirements. This ensures a balance between speed and expenditure.

Smart routing also promotes load balancing. Load balancing across multiple models or providers ensures that resources are utilized efficiently, preventing the overuse of costly LLMs when simpler queries can be handled by less resource-intensive models.

Increased Service Reliability

Service reliability is another core advantage of using an LLM gateway. With automatic retries, failover mechanisms, and circuit breakers, the gateway ensures that temporary service interruptions or performance issues with specific LLM providers do not halt your entire application. This makes your AI applications more resilient to outages.
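Retries, failover, and a circuit breaker can be combined in a compact sketch like the one below. The `CircuitBreaker` class and `call_with_failover` function are hypothetical, providers are plain callables, and the breaker is simplified: it counts consecutive failures and has no timed recovery, which a production implementation would normally add.

```python
from typing import Callable


class CircuitBreaker:
    """Stop calling a provider after max_failures consecutive errors."""

    def __init__(self, max_failures: int = 3):
        self.max_failures = max_failures
        self.failures = 0

    @property
    def open(self) -> bool:
        return self.failures >= self.max_failures

    def record(self, ok: bool) -> None:
        self.failures = 0 if ok else self.failures + 1


def call_with_failover(
    prompt: str,
    providers: list[tuple[str, Callable[[str], str]]],
    breakers: dict[str, CircuitBreaker],
    retries: int = 2,
) -> tuple[str, str]:
    """Try providers in order; return (provider name, response) from the first success."""
    for name, call in providers:
        breaker = breakers.setdefault(name, CircuitBreaker())
        for _ in range(retries):
            if breaker.open:
                break  # skip a provider whose circuit is open
            try:
                response = call(prompt)
            except Exception:
                breaker.record(ok=False)
                continue
            breaker.record(ok=True)
            return name, response
    raise RuntimeError("all providers failed")
```

Keeping the breakers in a dictionary that outlives individual requests is what lets the gateway stop hammering a provider that has already failed repeatedly.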

The gateway can also implement quality checks on responses, filtering out faulty or incomplete outputs, and ensuring that only relevant and high-quality responses are passed back to your application.

Simplified Debugging

Debugging complex AI-powered systems can be a tedious task, but an LLM gateway simplifies this with centralized logging and monitoring. Developers gain full visibility into all LLM interactions, including request and response payloads, error rates, latency, and usage trends. Advanced features like request tracing allow developers to follow the entire journey of a request through the system, pinpointing issues in real-time.
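Centralized logging with a trace identifier might look like the following sketch, which uses only the Python standard library. The `traced_call` function, the logger name, and the record fields are assumptions chosen for illustration, not a particular gateway’s log schema.

```python
import logging
import time
import uuid
from typing import Callable

logger = logging.getLogger("llm_gateway")


def traced_call(team: str, model: str, prompt: str, call_model: Callable[[str], str]) -> dict:
    """Call the model and emit one structured log record for the request."""
    trace_id = uuid.uuid4().hex  # lets one request be followed across components
    start = time.perf_counter()
    error = None
    response = None
    try:
        response = call_model(prompt)
    except Exception as exc:
        error = repr(exc)
    record = {
        "trace_id": trace_id,
        "team": team,
        "model": model,
        "latency_ms": round((time.perf_counter() - start) * 1000, 2),
        "error": error,
        "response": response,
    }
    logger.info("llm_request %s", record)
    return record
```

Because failures are captured in the same record as successes, error rates and latency can be computed from one log stream.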

This is also effective as users and teams are attributed for the requests they make to the LLM. This streamlined debugging process speeds up troubleshooting and minimizes downtime, ensuring that your systems remain operational and efficient.

Better Cost Visibility and Usage Monitoring

An often-overlooked advantage of LLM gateways is the comprehensive insight they provide into costs and usage. By acting as a centralized hub for all LLM interactions, gateways offer detailed reporting on token usage, enabling organizations to track AI expenditure across different models and providers.

With built-in dashboards, teams can identify patterns in usage and costs, revealing opportunities for optimization. For example, certain workflows may be consuming disproportionate resources, prompting a switch to more cost-effective models. This level of visibility helps both finance and engineering teams make informed decisions about budgeting and scaling AI systems efficiently.
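The kind of per-team cost report a dashboard would display can be sketched as a simple aggregation over usage records. The prices, model labels, and the `cost_report` function are hypothetical; real per-token prices vary by provider and usually differ for input and output tokens.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider and change over time.
PRICE_PER_1K_TOKENS = {"large-model": 0.01, "small-model": 0.001}


def cost_report(usage_log: list[dict]) -> dict[str, float]:
    """Sum estimated spend per team from records with team, model, and tokens fields."""
    totals = defaultdict(float)
    for entry in usage_log:
        price = PRICE_PER_1K_TOKENS[entry["model"]]
        totals[entry["team"]] += entry["tokens"] / 1000 * price
    return {team: round(cost, 6) for team, cost in totals.items()}
```

A report like this makes it easy to spot a team whose spend is dominated by the more expensive model and test whether a cheaper one would do.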

Architectural Overview of LLM Gateway

Before we learn how LLM Gateways work, let’s look at the LLM Proxy, as it will give you a better understanding of what a gateway is and how it works. So what is an LLM Proxy?

LLM Proxy

Like a gateway, an LLM Proxy is a server that connects a client and an LLM service provider. You can think of gateways as an upgraded version of a proxy with additional features. The core idea shared by the two is load balancing and traffic routing.

Proxies are best at routing requests, load balancing, and managing communication between users and LLMs. They also help in abstracting details, meaning the user is not necessarily aware of which model is serving their requests. Moreover, they offer a basic control over traffic flow.

So what is the use of a proxy?

As it turns out, you don’t need a proxy or an intermediary to send a request to an LLM and get a response from it. You can use any LLM API directly and get the work done. The reason to use a proxy or intermediary is the flexibility to change the LLM provider at will, for example when the primary LLM is not working because of network issues or when you have crossed its quota limits.

API working without a proxy | Source: Medium

In such a case, you want to quickly switch to another available LLM until the network is restored or the cooldown period on your quota is over. This is where the LLM proxy helps you. It takes requests from the user and sends them to the required LLM provider via its API.

Workflow of a proxy | Source: Tensorop

But a proxy has an issue. It creates a bottleneck because it receives both requests from the client or user and responses from the LLM. The bottleneck worsens when multiple clients are involved. This is when issues of routing, security, authentication, attribution, etc. arise.

To handle this bottleneck you can use a proxy that can be containerized. In this way, multiple copies of the proxy can be created with the same configuration, and depending on the request and response traffic from single or multiple clients it can be routed and even scaled.

Containerization of the proxies with similar configuration for scalability | Source: Tensorop

For instance, in the image above you will find that the proxy servers are enclosed in a container. The container is deployed in the Kubernetes cluster which can autoscale based on the (client and request) load using the automatic load balancer. The load balancer works on certain policies. Once the conditions are met according to the policy statements, the proxy can be scaled horizontally.
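The scaling policy mentioned above can be illustrated with the proportional rule that the Kubernetes Horizontal Pod Autoscaler documents: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The `desired_replicas` function and its default bounds are assumptions for this sketch, and it omits details such as the autoscaler’s tolerance band and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale proportionally to load, clamped to the configured replica bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

For example, two proxy replicas running at 90% average CPU against a 60% target would be scaled to three, while a heavily overloaded deployment is capped at `max_replicas`.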

This is a good way to scale the proxy servers based on the requirements and get responses from the desired LLMs.

While LLM proxies are a valuable tool for managing interactions with LLMs, they may not be sufficient for complex use cases that require advanced features, scalability, and integration with other systems.

LLM gateways, on the other hand, offer a more comprehensive solution by being centralized. For instance, an LLM gateway provides a central hub for accessing multiple models with added features like pre-processing and monitoring. Gateways also offer advanced services like governance, scalability, and customization. In short, proxies manage traffic, while gateways integrate models, cloud and storage APIs, and offer broader control.

Typical Architectural Components of an LLM Gateway

Now let’s understand the architecture of the LLM Gateway. Keep in mind that we will be recapitulating some of the terms from the earlier section. But we will learn how these terms function from an application point of view. Also, an LLM Gateway is an advanced version of the proxy, offering centralized control, routing, and management of LLM interactions.

Architecture of the LLM Gateway | Source: Adapted from Tensorop

While the specific architecture of an LLM Gateway may vary depending on the implementation, a typical architecture consists of the following components:

- UI+SDK: The UI (User Interface) and SDK (Software Development Kit) allow users and developers to interact with the LLM Gateway. The SDK provides tools to integrate with the gateway’s features programmatically, while the UI enables easier access to the platform’s functionalities, including managing LLM queries, settings, and analytics.
- API Gateway: The API Gateway, or Unified API Layer, is the core component of the gateway. It abstracts the communication between the front end (UI/SDK) and multiple cloud-based LLM services (Azure, AWS Bedrock, Google Cloud). It provides a single interface to access different LLMs, so that switching between providers is seamless and requires no changes to the client code. This layer handles routing, load balancing, and request distribution across multiple LLM providers.
- 3rd Party Service Interaction: The 3rd party services can include any additional features that you want to add, such as prompt management tools. With the gateway, you can easily integrate tools of your choice. The services can interact directly with the LLM providers through an SDK or RESTful requests.
- Security: To ensure that only authorized users can interact with the system or access sensitive data, the gateway offers Role-Based Access Control. Additionally, the API gateway secures communications between clients, the gateway, and third-party LLM services.
- Audit: This component allows you to track all actions performed on the gateway, including LLM queries, configuration changes, and security events. This auditing ensures accountability and compliance with internal policies or external regulations.
- SSO: The Single Sign-On (SSO) module allows users to authenticate into the system using a single set of credentials, reducing friction and improving security. This feature is especially useful for enterprise environments where integration with identity providers is critical.
- Secret Management: The Secret Management feature handles the secure storage and access of sensitive information such as API keys, tokens, and other credentials required for accessing LLM services. It ensures that secrets are encrypted and only accessible by authorized services and users, reducing the risk of data breaches.

The interesting part is that all of this security management and handling happens at the proxy level.

How LLM Gateways Fit into the Broader LLM Ecosystem

LLM Gateways serve as an intermediary, enabling seamless integration of multiple LLMs into applications. They provide a centralized solution for managing LLM interactions, allowing enterprises to access different models without significant code changes. Unlike direct API use or simple LLM proxies, gateways add essential features like load balancing, security, and failover, crucial for large-scale deployments.

In the broader ecosystem, gateways enhance scalability and flexibility, making LLMs more accessible to a wider range of applications across industries.

Deployment Options: Containerization & Cloud-Based Architectures

When it comes to deploying LLM Gateways, flexibility is a critical factor. The deployment can be customized based on infrastructure, traffic demands, and operational goals.

Architecture of deploying LLM Gateway in various cloud infrastructures | Source: truefoundry

Here’s an overview of the two common deployment options:

- Containerization: By leveraging containerized environments such as Docker and Kubernetes, LLM Gateways can be deployed and scaled horizontally. In this setup, multiple container instances of the gateway can be spun up to handle traffic surges. Kubernetes, for example, ensures that the LLM Gateway can autoscale based on demand, managing resources efficiently. This is particularly useful in high-traffic scenarios where thousands of requests need to be routed to different LLMs in real time. Additionally, containerization allows for easy updates and rollbacks, keeping downtime during deployments to a minimum.
- Cloud-Based Architectures: Alternatively, organizations can deploy LLM Gateways using cloud platforms like AWS, Azure, or Google Cloud, typically as containers. In this setup, the provider manages key infrastructure aspects such as autoscaling, redundancy, and security, while container orchestration platforms like Kubernetes ensure smooth deployment and scaling of the gateway. These platforms offer built-in features such as serverless computing and load balancing, which simplify managing high availability and performance at scale. The cloud also provides integrated security measures, including encryption and compliance management, which add an extra layer of protection for enterprise data.

Each deployment method has its advantages. Containerization offers tighter control over the infrastructure and can be ideal for specific use cases where the gateway needs to be highly customizable.

Cloud-based architectures, on the other hand, reduce infrastructure management complexity, making them more suitable for companies looking for scalable, managed solutions.

Conclusion

LLM Gateways have emerged as a crucial component in the rapidly evolving landscape of AI and language model integration. They offer a comprehensive solution to businesses and developers when working with multiple LLMs.

Here are the key takeaways:

- Simplified Integration: LLM Gateways provide a unified API. This allows seamless access to various LLMs from different providers through a single interface.
- Enhanced Security and Compliance: By offering centralized authentication, access control, and data handling, gateways significantly improve security measures and help maintain regulatory compliance.
- Optimized Performance and Cost Efficiency: Through smart routing, caching, and load balancing, gateways enhance application performance while optimizing operational costs.

The implementation of an LLM Gateway offers several advantages:

- Streamlined development and maintenance processes
- Improved service reliability with failover mechanisms
- Simplified debugging through centralized logging and monitoring
- Better visibility into costs and usage patterns

From an architectural standpoint, LLM Gateways build upon the concept of LLM Proxies, offering more advanced features such as routing, security, and analytics capabilities. They typically consist of components like a unified API layer, security modules, and integration with third-party services.

Deployment options for LLM Gateways are flexible, with containerization and cloud-based architectures being the primary choices. These options allow for scalability and customization based on an organization’s specific needs and infrastructure preferences.