Lúí Smyth (Director of Product, Generative & AI Tooling, Shutterstock) gave this presentation at the Computer Vision Festival in August 2023.

Hello, everybody. My name is Lúí, and I’m the Director of Product for Generative and AI at Shutterstock. I’m going to be talking about art in the age of AI, and by the end of this presentation, I hope to have given you a sense of the crazy world that generative AI unlocks and how you can go about navigating that world.

Specifically, I’m going to run through:

- How generative AI works at a high level
- What it unlocks
- Some of the challenges it poses for us
- And how we responded to those challenges at Shutterstock

How does Shutterstock leverage AI?

First, a few quick words on Shutterstock itself. We like to say that we’re one platform with 734 million ideas. That’s the number of images we have for sale right now.

And that’s just our image library. We also have music, video, 3D, and various other content types.

So you might ask, “Why is Shutterstock talking about AI?” And the answer is actually that we’ve been using AI for years in a ton of different ways. The most obvious is probably our reverse image search. It’s one of the most used features on the site. If you have an image that’s very close to the one you want, you can upload it onto the site and we’ll show you similar images that you can download and license.

We also use AI to classify, score, and understand the images that contributors put up for sale on the site. This helps us surface them in search for the right customers that are looking for those pictures that’ll meet their needs.

In recent years, we’ve become one of the biggest data brokers for computer vision companies looking for large datasets of well-tagged images that are available for licensing.

And of course, we have our own AI image generator for anyone who wants to be able to very easily generate new images and license them with confidence, without having to train to become a prompt engineer.

How does text-to-image generative AI work?

Now, let’s talk about generative AI and how it works.

The first thing to say is that anything that’s represented on a digital screen can be conceived as a mathematical artifact. An image is made up of pixels, and each of those pixels has a color value.

For example, the picture of Abraham Lincoln below can be represented as a very large matrix of numbers.

Now, there are actually lots of different ways you can represent images as numbers, and this isn’t necessarily the one we use when producing models with AI, but it’s a useful way of conceptualizing it.
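To make the idea of an image as a grid of numbers concrete, here is a minimal sketch. The 4x4 grayscale grid, the 0 to 255 value range, and the helper names `brightness` and `invert` are assumptions made for illustration, not the representation used in any production model.

```python
# A tiny 4x4 grayscale "image": each number is one pixel,
# where 0 is black and 255 is white (values are made up).
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [255, 255, 0, 0],
    [255, 255, 0, 0],
]


def brightness(img):
    """Return the average pixel value of the image."""
    total = sum(sum(row) for row in img)
    return total / (len(img) * len(img[0]))


def invert(img):
    """Return a new image with every pixel flipped (black <-> white)."""
    return [[255 - pixel for pixel in row] for row in img]


print(brightness(image))
print(invert(image)[0])
```

Once a picture is just numbers, operations like brightening or inverting it become ordinary arithmetic on those numbers.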

It’s also important to know that we can represent words and even semantic concepts as very large sets of numbers in different ways as well.

So, to build up a generative model, we create an algorithm that’s able to connect images with the semantic concepts represented in a given image. And we do this by having the model make predictions and then telling it what it got right and what it got wrong.

When it successfully gets a prediction correct, we reinforce the path that led to that prediction, and over many, many, many iterations and lots of data, the AI model learns to very accurately classify what’s in an image.
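The predict-and-correct loop described above can be sketched with a perceptron, which is a far simpler model than the deep networks used in practice and is swapped in here purely for illustration. The toy 2x2 "images", their labels, and the function names are all assumptions. Note that this particular algorithm adjusts its weights when a prediction is wrong and leaves them alone when it is right.

```python
# Toy training data: flattened 2x2 grayscale images with values from 0 to 1.
# Label 1 means "bright", label 0 means "dark". All values are made up.
TRAINING_DATA = [
    ([0.9, 0.8, 1.0, 0.7], 1),
    ([0.1, 0.2, 0.0, 0.3], 0),
    ([0.8, 0.9, 0.6, 0.9], 1),
    ([0.2, 0.1, 0.3, 0.0], 0),
    ([1.0, 1.0, 0.9, 0.8], 1),
    ([0.0, 0.1, 0.2, 0.1], 0),
]


def predict(weights, bias, pixels):
    """Guess the label: 1 if the weighted sum of pixels is positive, else 0."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if score > 0 else 0


def train(data, epochs=20, learning_rate=0.1):
    """Repeatedly predict, compare with the true label, and nudge the weights."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in data:
            error = label - predict(weights, bias, pixels)  # 0 when correct
            weights = [w + learning_rate * error * p
                       for w, p in zip(weights, pixels)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)
print(predict(weights, bias, [0.7, 0.9, 0.8, 0.9]))  # an unseen bright image
print(predict(weights, bias, [0.1, 0.0, 0.2, 0.1]))  # an unseen dark image
```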

Now, generative is actually a very similar technology, but in reverse. So, instead of going from image to words, we’re going from words to image.

The way that might look is we take an input, like the word “cat,” we generate a ton of random sets of pixels, and we say, “No, none of these represent ‘cat.’”

But maybe we find one that has a slightly more catty feel to it than the others, and we take that and use it as a basis for the next iteration. And we say, “Okay, let’s generate variations on this.” And we generate a tonne of them and we say, “Okay, no, none of these look like cats. But maybe this one here looks slightly more catty.”

We do this many, many, many times, and over those cycles, we end up with a beautiful picture of a cat.
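The generate, pick, and vary loop just described can be sketched as a simple random search. Everything here is an assumption for illustration: `TARGET` is a made-up stand-in for whatever the model has learned "cat" looks like, `cattiness` is a hypothetical scoring function, and real text-to-image systems rely on a trained neural network rather than a fixed target pattern.

```python
import random

# Hypothetical stand-in for the model's notion of "cat": an 8-pixel pattern.
TARGET = [0.0, 1.0, 1.0, 0.0, 1.0, 0.0, 0.0, 1.0]


def cattiness(pixels):
    """Score a candidate: higher (closer to zero) means more cat-like."""
    return -sum((p - t) ** 2 for p, t in zip(pixels, TARGET))


def vary(pixels, rng, step=0.1):
    """Return a slightly perturbed copy, keeping each pixel within 0 to 1."""
    return [min(1.0, max(0.0, p + rng.uniform(-step, step))) for p in pixels]


def generate(rounds=200, candidates=20, seed=0):
    """Start from noise, then keep the most cat-like variation each round."""
    rng = random.Random(seed)
    best = [rng.random() for _ in TARGET]
    history = [cattiness(best)]
    for _ in range(rounds):
        # The current best stays in the pool, so the score never gets worse.
        pool = [best] + [vary(best, rng) for _ in range(candidates)]
        best = max(pool, key=cattiness)
        history.append(cattiness(best))
    return best, history


best, history = generate()
print(f"score at start: {history[0]:.3f}, score at end: {history[-1]:.3f}")
```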

Unlocking the vast potential of generative AI

Now, what if you ask for something we’ve never seen before, like a unicorn or a combination of different words?

Well, in the case of a unicorn, I mentioned earlier that we’re able to connect words and semantic concepts and treat them as numbers that are connected to each other in different ways. So we may be able to know that a unicorn is close to similar concepts like horses and single-horned animals, and we may be able to generate something in the right direction.
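Here is a minimal sketch of what "close to similar concepts" can mean when words are treated as numbers. The four-number vectors below are hand-made assumptions (roughly: horse-like, horned, magical, feline), whereas real models learn much longer vectors from data. Cosine similarity is one common way to measure closeness.

```python
import math

# Hypothetical hand-made concept vectors:
# [horse-like, horned, magical, feline]
CONCEPTS = {
    "unicorn": [0.9, 0.8, 0.9, 0.0],
    "horse": [1.0, 0.0, 0.1, 0.0],
    "rhinoceros": [0.2, 1.0, 0.0, 0.0],
    "cat": [0.1, 0.0, 0.1, 1.0],
}


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def neighbours(word):
    """Rank every other concept from most to least similar to `word`."""
    others = [name for name in CONCEPTS if name != word]
    return sorted(
        others,
        key=lambda name: cosine_similarity(CONCEPTS[word], CONCEPTS[name]),
        reverse=True,
    )


print(neighbours("unicorn"))
```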

But generally, it’ll be worse if we don’t have a direct representation as an input. So, suffice to say that to build AI models, we need huge data sets.

Fortunately, that’s something we have at Shutterstock. As I said, we have hundreds of millions of images, and we’re able to use this absolutely vast quantity of inputs because every single image contains lots and lots of information. We’re able to connect them all in ways where you can actually come up with unusual combinations.

It doesn’t have to necessarily be a concept that was represented in the original dataset. It can be a connection of multiple concepts or mashups.

So what does this unlock? What’s enabled by this?

Well, I just took a few hundred recently generated images at random from our library. And it’s so much fun; this is purely a random selection, and the variety, depth, and interestingness of all these different types of images are mind-blowing. It’s chaotic and it’s a lot to take in. It’s impossible to take in. The overall impression is one of absolute abundance, so it’s very hard for us to wrap our heads around this.

One of the ways we can do this is to think about it as a vast, multi-dimensional space, and we can think of all content variations as being mathematical entities within that space. That space is referred to as latent space.

And how big is that space? Well, a 10-character message written with just the 26 letters of the alphabet has about 141 trillion possible combinations. Each of these images has far, far bigger sets of characters or bits, so the latent space used to build these visuals is much, much more vast.
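The arithmetic behind that figure is easy to check. The sketch below assumes the message uses only the 26 letters of the English alphabet, which is what reproduces the 141 trillion number. The 8x8 black-and-white image is an added illustration of how quickly the count grows for pictures.

```python
# Assumption: each of the 10 characters is one of 26 letters.
alphabet_size = 26
message_length = 10
message_combinations = alphabet_size ** message_length
print(f"{message_combinations:,}")  # 141,167,095,653,376

# Illustration: an 8x8 image where each pixel is only black or white.
pixels = 8 * 8
tiny_image_combinations = 2 ** pixels
print(f"{tiny_image_combinations:,}")  # 18,446,744,073,709,551,616
```

Even a 64-pixel, two-color image has over a hundred thousand times more possibilities than the 10-letter message, and real images have millions of pixels with many possible colors each.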

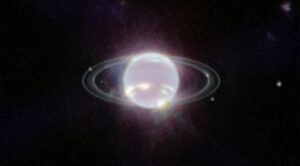

So, there are lots of ways we can think about this. My preferred metaphor is to imagine this as a gas giant, a phenomenally large, planetary object that you’re able to navigate through, and you can move in so many directions.

We can conceptually only think of three dimensions, but just try to imagine you’re in some sort of hyper-dimensional space, and your mission as a creative using generative AI is to dive into the gas giant of potential content.

Your kit list starts with your navigation spacesuit, which might be the tooling you’re using to navigate generative AI. Your quality assessment goggles are your artistic discernment of the different images. You also have a map of wherever it is you’ve come from (I don’t think you have a map of where you want to go, because you haven’t been there yet), and maybe some training as well.

This is a new skill set. It’s a new space, and we try to make it as easy as possible. But there’s a whole road you can go on if you go down the training path.

So, you want a picture of a cat. Well, sure, there’s a quadrillion ways you could represent a cat. But what else can you do in this space? What will you find in there?

Well, you’ll find images that reflect reality. You’ll find images that mask reality. You’ll find images that mask the absence of reality. You’ll find images that bear no relation to reality or unreality whatsoever. And you’ll find images that mash together reality and unreality.

So in a world where any combination of any words you can think of can be transformed into pictures, the possibilities are endless.

You can explore and flesh out ideas, you can build storyboards, you can mock up creative campaigns, and you can go down these creative wormholes that you never thought existed. Eventually, we expect to create images that meet any need imaginable.

Dealing with the challenges of generative AI

So what could possibly go wrong? This all sounds great. But what are the challenges?

Well, we’re going to face some big questions in a tonne of different areas around:

- Authorship and intellectual property
- Bias
- Truth and authenticity
- Artistic production
- Identity

We’re going to face questions like, who’s the originator of this image? Is it the pool of artists that all contributed to the artistic paint style represented? The people that licensed the image to Shutterstock? Is it the artist who created moonscapes and landscapes of different planets? Is it the AI scientists who put together the model?

Is it the person who put in the prompt text that actually generated this picture? Or is it the team that put together the tooling that took that prompt and turned it into something that resulted in a really good image from the actual model?

There’s no easy answer.

Who is this person below? This is an AI-generated image.

Did this happen below? The answer is that it didn’t by any conventional definition. This is an AI-generated image that was in the news. And that’s before we even get into the question of bias.

AI generative models arrive with biases. If you sent prompts straight to the AI without any kind of reworking in the interest of representation and just said, ‘What is a CEO?’ or ‘What is a genius?’ or ‘What is a nurse?’, you’d get these sorts of images:

You’re going to get biases around race, gender, orientation, and age. And this isn’t surprising because these reflect the wider societal biases and inequality in our world today.

Shutterstock’s framework for responsible AI

So, how do we respond?

We’re optimists at Shutterstock. We believe that we can face these challenges head-on, enjoy the fruits of this whole new paradigm, and build a world where creators benefit from AI. And we’ve done a lot of work in making this a reality.

This isn’t just lip service; we’ve come up with an action-oriented set of responses.

We make sure we’re only training or using models that have been trained on properly licensed data, and we ensure that our contributors have the right to opt out of that training.

We pay royalties to the artists who make this possible to the best of our ability with the technology as it is today. That means royalties get aggregated at a pool level: for any particular image, it’s viable to work out which pool of images provided the determining inputs that made it possible.

We proactively uplift underrepresented groups with a tonne of safeguards in place around content produced, what kind of prompts we allow, and how those are handled, and we bake in transparency by default.

So to give you a concrete sense of what that looks like, we’ve always been a two-sided marketplace. We bring together Shutterstock contributors who are typically photographers, illustrators, artists, and so on, and we connect them with Shutterstock customers who are typically graphic designers, marketers, solopreneurs, and people in creative departments at big companies.

We connect these two groups with the Shutterstock library. But what does that look like in a generative world?

Well, let’s talk about the first part of the library, that two-sided marketplace.

We license out our library as a training set to responsible computer vision partners, and we actually pay a royalty on this transaction from the get-go. There’s usually some kind of commercial arrangement here that includes a payment into the contributor fund, and that’s already been a source of significant royalties for our contributors. The licensed data is then used to build generative models.

And then for our AI image generator, we take the generative model and equip it with bias mitigation, prompt enrichment, handling of different languages, styles, and so on. Then your user will come along and put in a prompt and they’ll search for something like, ‘massive yak god on Martian landscape’ or ‘cats in pajamas,’ or whatever it might be. And then we’ll generate those images.

One of the things that makes us unique is that we don’t charge our users for generating the pictures themselves. It’s never been how we’ve worked. We’ve always been a library that allows people to explore and find images that meet their needs.

But if one of our customers does find an image that they need, they’ll initially just see it watermarked. They can then download a full-res version and get a commercial license on it by using one of their Shutterstock credits, which results in a royalty payment that goes to the contributor fund.

So that’s how we’ve tackled the question of training data and making sure there’s some sort of payment going through in an aggregated way to the contributors behind the training data.

There are a tonne of other areas that continue to be challenges around diversity among our content and our creators. We do intervene to balance and improve representations of different underrepresented groups on our platform, but our policies here actually go beyond generative, so we’re striving to enhance and expand the usage of human creative content on the site itself.

We don’t want to replace the whole site with AI-generated content. The picture below isn’t AI-generated. This is something that we think will be a huge part of the mix for the foreseeable future.

Shutterstock: Championing diversity among content and creators

We’ve created a Create Fund that supports projects that further our commitment to inclusive creativity, namely, sponsoring creators to create content that represents groups that historically don’t get well represented. We’re actively committed to various standards in the industry.

A key thing here is that all these challenges are shared, and that’s particularly true when it comes to issues around safety, transparency, and authenticity. These require a shared cross-industry response.

It’s been really heartening to see enthusiasm within the company and across the sector for proactive measures to face these questions head-on.

Now, that said, we know we don’t have all the answers. So if there’s anyone here that’s working on solutions that may help any of the above, we’d love to hear from you.

If you haven’t already tried generative AI, you can head on over to shutterstock.com/generate, throw in a prompt, and give it a go. It only takes a few seconds, and we’d love to hear your feedback.
