Learn about Google’s latest flagship model, “Gemini,” a direct competitor to GPT-4 that was reportedly trained with five times the computing resources and built with multimodal capabilities.

Google’s latest flagship model, codenamed “Gemini,” was reportedly trained with about five times the computing power used for GPT-4, and it can produce both text and images!

Scheduled for public release in December, Gemini is a direct competitor to GPT-4. Many details about it have circulated online in recent weeks, and they point to an enormously powerful new model.

Gemini was created from the ground up to be multimodal, highly efficient at tool and API integrations and built to enable future innovations, like memory and planning.

Sundar Pichai, CEO of Alphabet

Here we share a few of those details.

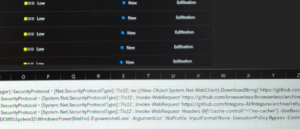

How Much Computational Power Was Used to Train Gemini?

Google reportedly invested more computational power in training Gemini than has ever been used for a single model: about five times the compute used to train GPT-4!

Training a single model at this scale is beyond what conventional model-training hardware can deliver.

According to the reports, the model was trained exclusively on Google’s latest training chips, known as TPUv5. These chips are said to be the only hardware able to coordinate 16,384 chips working in parallel, which was key to making such large-scale training possible.

At present, no other organization in the field is believed to have the capacity for training runs of this size.

What Dataset Was Used to Train Gemini?

Information about the training dataset is limited. However, the reports indicate the following.

Google reportedly holds an extensive collection of code-only data, estimated at around 40 trillion tokens, a figure the reports describe as verified. This code dataset alone would be four times larger than the entire dataset, code and non-code combined, used to train GPT-4.

After data refinement (filtering, deduplication, cleaning, summarization, and noise reduction), the complete dataset is estimated at approximately 65 trillion tokens. This estimate is based on the token counts of data collections Google has used to train its previous models.

What Are Gemini’s Multimodal Capabilities?

What Does Multimodal Mean?

Multimodal learning is an approach in which a machine learning model works with several forms of input data, such as text, images, and audio. Each data type, or modality, captures a different facet of the world, reflecting the different ways in which it is perceived and experienced.

Multimodal training has repeatedly been shown to improve model performance. Getting different data types to reinforce one another is challenging, but it has been demonstrated successfully on multiple occasions.

With Gemini, Google aims to take this concept to an unprecedented level of sophistication.

Gemini’s Multimodal Capabilities

Gemini accepts input in the form of text, video, audio, and images. What sets it apart is that it can generate images as well as text; if the reports hold, this makes it one of the first major text-generation models with built-in image generation, a significant milestone.

Gemini vs. ChatGPT: The Future of Generative AI

The emergence of Google’s groundbreaking model, Gemini, marks a new era in artificial intelligence. In the “Gemini vs. ChatGPT” showdown, Gemini looks like a harbinger of AI’s future. Reportedly trained with five times the computational power of GPT-4, the model behind ChatGPT, and able to process multimodal data like never before, Gemini is a formidable competitor. It also reportedly combines an enormous dataset, advanced training techniques, and the ability to generate images alongside text.

ChatGPT, for its part, reflects the significant advances the AI field has made since its launch. However, if the reported figures are accurate, it lacks the sheer computational might and multimodal breadth that Gemini embodies.

As we step into this new age of AI, the race for innovation continues. Gemini may pave the way for more powerful, versatile, and context-aware AI models, setting the stage for a future in which AI can truly understand and interact with the world in unprecedented ways.